The leading provider of In-Home Video EEG Testing in the United States hired Positronic in a consultative capacity to train deep learning models to diagnose certain disease states in near real time. The client’s stated goal is to improve the quality of neurophysiology studies and of the data they produce. What follows describes some of the work we did toward AI interpretation of EEGs. All of this work is either protected as a trade secret or patent-pending, so the discussion here is intentionally vague to honor NDAs and protect trade secrets.

LABELS

The first major challenge was converting decades of human-generated data into data fit for consumption by a neural net. Human writing is notoriously ambiguous. For example, would you label the following paragraph, taken without alteration from an actual clinical report, as normal or abnormal?

This study is normal. There were no interictal epileptiform discharges nor seizures recorded. This continuous video/EEG monitoring is abnormal due to the intermittent slowing of a posterior dominant rhythm and rudimentary polyspike discharges. There were no seizures recorded.
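To make the ambiguity concrete, here is a toy keyword rule applied sentence by sentence to the report above. The rule and the function name `naive_sentence_labels` are hypothetical illustrations, not Positronic's method. The rule finds evidence for both labels in the same report, which shows why simple keyword matching cannot settle the label on its own.

```python
import re

# The clinical report quoted above, verbatim.
REPORT = (
    "This study is normal. There were no interictal epileptiform discharges "
    "nor seizures recorded. This continuous video/EEG monitoring is abnormal "
    "due to the intermittent slowing of a posterior dominant rhythm and "
    "rudimentary polyspike discharges. There were no seizures recorded."
)


def naive_sentence_labels(text: str) -> list[tuple[str, str]]:
    """Hypothetical toy rule: label each sentence by keyword. Not a real classifier."""
    # Split on whitespace that follows a period.
    sentences = [s.strip() for s in re.split(r"(?<=\.)\s+", text) if s.strip()]
    labels = []
    for s in sentences:
        lower = s.lower()
        # Check "abnormal" first; \bnormal\b does not match inside "abnormal" anyway.
        if re.search(r"\babnormal\b", lower):
            labels.append(("abnormal", s))
        elif re.search(r"\bnormal\b", lower):
            labels.append(("normal", s))
        else:
            labels.append(("none", s))
    return labels


found = {label for label, _ in naive_sentence_labels(REPORT) if label != "none"}
print(sorted(found))  # ['abnormal', 'normal']: the same report yields both labels
```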

Existing NLP technologies, consisting of some combination of off-the-shelf libraries (notably Stanford CoreNLP) and convolutional neural networks, were critically deficient at classifying the narrative structure of these clinical reports. Positronic invented a novel approach to NLP that classified these reports with greater than 98% accuracy, as audited by certified neurologists.

DATA

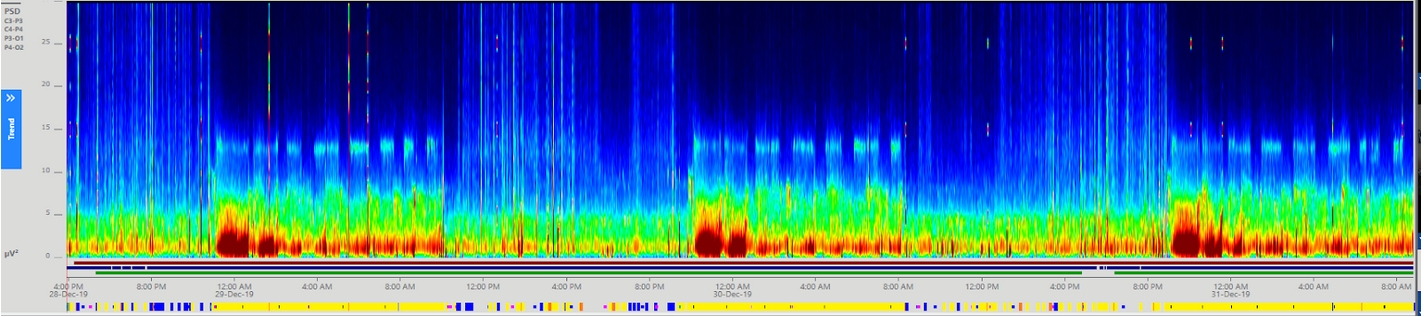

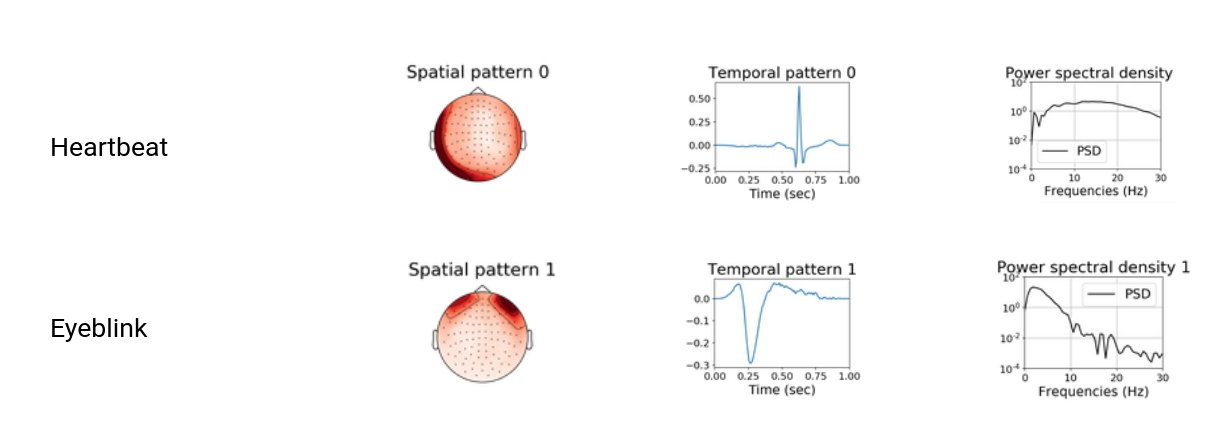

The second major challenge was the raw data itself. Each sample is an electroencephalogram (EEG) file segment containing up to 72 hours of recording, sampled at 200 Hz across 19 spatially related channels. Compounding the challenge, the signals that separate one class from another are varied, sparse, and not uniformly distributed. A relevant signal may last less than a second in a single channel: roughly a hundred data points out of nearly a billion.
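The scale figures above can be checked with a few lines of arithmetic. This sketch uses only the numbers stated in the text (72 hours, 200 Hz, 19 channels); the half-second event duration is an assumption chosen to match "roughly a hundred data points."

```python
# Back-of-the-envelope scale of one EEG sample, using the figures quoted above.
HOURS = 72            # maximum segment length
SAMPLE_RATE_HZ = 200  # samples per second, per channel
CHANNELS = 19         # spatially related channels

seconds = HOURS * 60 * 60                      # 259,200 s
points_per_channel = seconds * SAMPLE_RATE_HZ  # samples in one channel
total_points = points_per_channel * CHANNELS   # samples across all channels

# Assumption: a discriminative event lasting half a second in one channel.
EVENT_SECONDS = 0.5
event_points = int(EVENT_SECONDS * SAMPLE_RATE_HZ)

fraction = event_points / total_points

print(f"points per channel: {points_per_channel:,}")  # 51,840,000
print(f"total points:       {total_points:,}")        # 984,960,000
print(f"event points:       {event_points}")          # 100
print(f"signal fraction:    {fraction:.2e}")          # 1.02e-07
```

In other words, the evidence that separates a positive from a negative study can be about one data point in ten million.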

COMPUTE

The third major challenge was that the compute power required to train a neural net of this scale on data of this scale simply didn’t exist. NVIDIA invited us to train for two months on two of their DGX-2 supercomputers, which they supplied in the hope of using the success story in marketing literature for these half-million-dollar machines. Over those two months, even NVIDIA’s own team could not achieve more than a 4x improvement over our more pedestrian hardware, which cost a tenth as much.
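Put in throughput-per-dollar terms, the comparison above looks like this. The sketch uses only the two ratios in the text and assumes "a tenth of the cost" means our hardware cost one tenth of the DGX-2 setup it was benchmarked against.

```python
# Rough throughput-per-dollar comparison using only the ratios quoted above.
# Assumption: our baseline hardware cost 1/10 of the DGX-2 setup.
SPEEDUP = 4.0      # best DGX-2 speedup over our hardware
COST_RATIO = 10.0  # DGX-2 cost relative to our hardware

# DGX-2 throughput per dollar, normalized so our hardware = 1.0.
relative_perf_per_dollar = SPEEDUP / COST_RATIO
print(f"DGX-2 throughput per dollar: {relative_perf_per_dollar:.1f}x of baseline")
# prints "DGX-2 throughput per dollar: 0.4x of baseline"
```

On this reading, the supercomputers delivered less than half the work per dollar of the cheaper hardware, so buying more hardware was not a viable path.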

Ultimately, the solution was to develop new optimization tools, both inside and outside the architecture of the neural network itself. Applying these tools (patent-pending) yielded a 100x speed-up in processing time, which made the training feasible.

ARCHITECTURE

The fourth major challenge was that existing off-the-shelf neural network architectures were simply unable to learn from this data. Positronic created five novel neural network technologies, including innovative pooling techniques, gradient descent improvements, sub-signal processing, and normalizations. All five are currently going through the patent process.